How Hadoop Handles Data Processing

Introduction

Hadoop took shape in 2006, when Doug Cutting joined Yahoo! and the distributed storage and processing code he had written with Mike Cafarella for the Nutch search project was spun out into its own framework, written entirely in Java. The name has a whimsical origin: Cutting, one of Hadoop's creators, borrowed it from his son's toy elephant.

The two foundational components of Hadoop, HDFS (Hadoop Distributed File System) and MapReduce, are open-source counterparts of the designs Google published for the Google File System (GFS) in 2003 and for MapReduce in 2004. As we delve into HDFS and MapReduce, we will see where Hadoop follows Google's architecture and where it departs from it. Let's walk through how Hadoop approaches data storage, retrieval, and processing.

HDFS

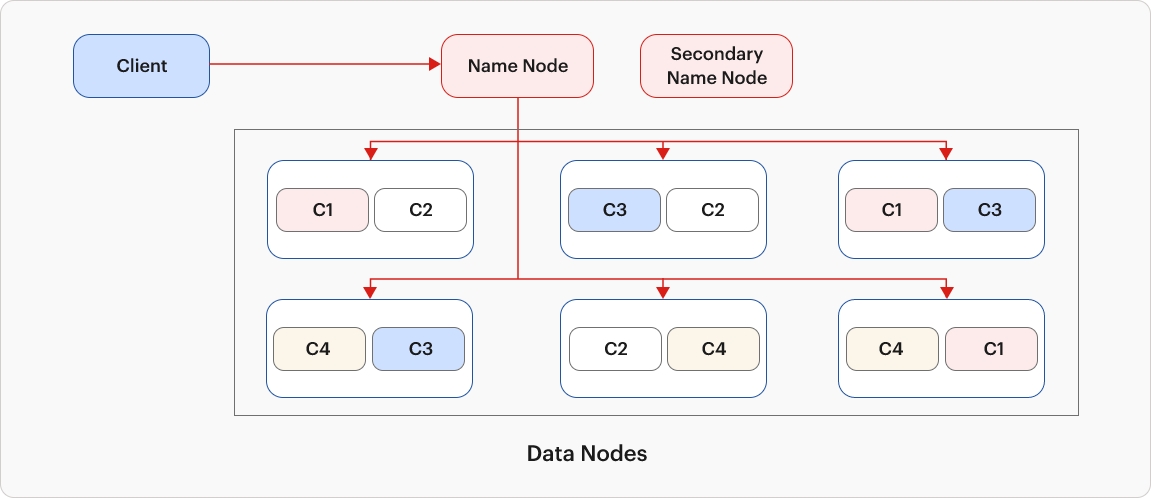

In HDFS, the architecture consists of a NameNode, a Secondary NameNode, and DataNodes, which roughly correspond to the Master, Shadow Master, and Chunk Servers in GFS. The read path is the same in both systems. The client first contacts the NameNode and requests a file. The NameNode, which keeps a metadata table mapping each file's blocks to the DataNodes that hold them, replies with those locations. The client then reads the data directly from the respective DataNodes.
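The read path described above can be sketched as a small in-memory simulation. This is not the HDFS client API; the class names, the `get_block_locations` method, and the file path are hypothetical stand-ins chosen only to show that the NameNode serves metadata while the DataNodes serve the bytes.

```python
from dataclasses import dataclass, field


@dataclass
class DataNode:
    """Holds block contents keyed by block ID (a stand-in for local disk)."""
    name: str
    blocks: dict = field(default_factory=dict)

    def read_block(self, block_id):
        return self.blocks[block_id]


@dataclass
class NameNode:
    """Holds metadata only: which blocks make up a file and where they live."""
    # file path -> ordered list of (block_id, [names of DataNodes holding it])
    block_map: dict = field(default_factory=dict)

    def get_block_locations(self, path):
        return self.block_map[path]


def read_file(path, name_node, data_nodes):
    """Client-side read: ask the NameNode where, then fetch from DataNodes."""
    chunks = []
    for block_id, holders in name_node.get_block_locations(path):
        # Simplification: always use the first listed replica.
        chunks.append(data_nodes[holders[0]].read_block(block_id))
    return b"".join(chunks)


# Hypothetical two-block file spread across two DataNodes.
dn1 = DataNode("dn1", {"blk_1": b"hello "})
dn2 = DataNode("dn2", {"blk_2": b"hadoop"})
nn = NameNode({"/logs/a.txt": [("blk_1", ["dn1"]), ("blk_2", ["dn2"])]})

data = read_file("/logs/a.txt", nn, {"dn1": dn1, "dn2": dn2})
print(data)
```

Note that the file contents never pass through the `NameNode` object in this sketch, which mirrors why the metadata server does not become a data-transfer bottleneck.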

Did you know?

GFS and HDFS use different names for equivalent components:

| GFS | HDFS |
|---|---|
| Master | NameNode |
| Shadow master | Secondary NameNode |
| Chunk server | DataNode |
| Chunk | Block |
| C++ (implementation language) | Java (implementation language) |

Additionally, HDFS uses a default replication factor of 3 and, since Hadoop 2.x, a default block size of 128 MB (Hadoop 1.x defaulted to 64 MB). Each DataNode sends periodic heartbeats to the NameNode, which uses them to track which DataNodes are still alive.
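To see what these defaults mean in practice, here is a small arithmetic sketch. The function name and the example file sizes are illustrative assumptions; the only values taken from the text are the 128 MB block size and the replication factor of 3.

```python
import math

BLOCK_SIZE_MB = 128      # default block size stated above
REPLICATION_FACTOR = 3   # default replication factor stated above


def hdfs_footprint(file_size_mb):
    """Return (number of blocks, total block replicas, raw storage in MB)."""
    blocks = math.ceil(file_size_mb / BLOCK_SIZE_MB)
    replicas = blocks * REPLICATION_FACTOR
    # A partially filled last block only occupies the bytes it holds,
    # so raw usage is the file size times the replication factor.
    raw_storage_mb = file_size_mb * REPLICATION_FACTOR
    return blocks, replicas, raw_storage_mb


print(hdfs_footprint(1024))  # a 1 GB file
print(hdfs_footprint(300))   # a file that does not fill its last block
```

A 1 GB file is therefore split into 8 blocks, stored as 24 block replicas, and consumes about 3 GB of raw disk across the cluster.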

MapReduce with Hadoop

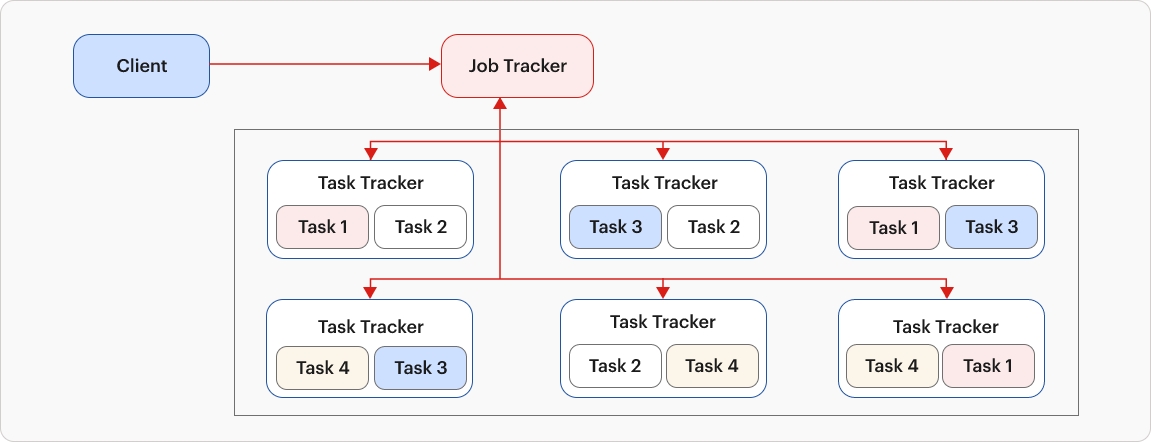

Hadoop implements MapReduce with its own set of daemons. A JobTracker, a master daemon that in small clusters often runs on the same machine as the NameNode, oversees running jobs and schedules new tasks. Each DataNode hosts a TaskTracker, which reports the status of its currently executing tasks to the JobTracker. The tasks themselves run inside Java Virtual Machines (JVMs). This architecture is depicted below.

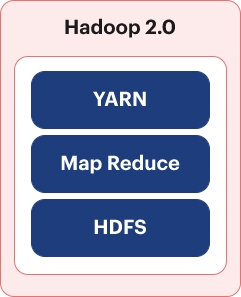
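To make the map and reduce steps that these daemons schedule more concrete, here is a minimal single-process sketch of the classic word-count pattern. It is plain Python, not Hadoop's Java API, and the function names and sample lines are illustrative assumptions; in a real cluster the map and reduce calls would run as separate tasks on different nodes.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Group values by key, as the framework does between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: sum the counts collected for one word."""
    return key, sum(values)


lines = ["Hadoop stores data", "Hadoop processes data"]
mapped = [pair for line in lines for pair in map_phase(line)]
counts = dict(reduce_phase(k, v) for k, v in shuffle(mapped).items())
print(counts)
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` only on its own key, the work can be spread across many machines without coordination between tasks.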

Hadoop 2.0

Hadoop 2.0, which became generally available in 2013, marked a significant milestone by introducing YARN (Yet Another Resource Negotiator) to relieve the strain on the JobTracker in Hadoop 1.0. There, the JobTracker handled both the scheduling and the monitoring of every task, while each TaskTracker simply ran mappers and reducers locally and reported back to the JobTracker. As clusters grew beyond roughly 4,000 nodes, the JobTracker became a bottleneck.

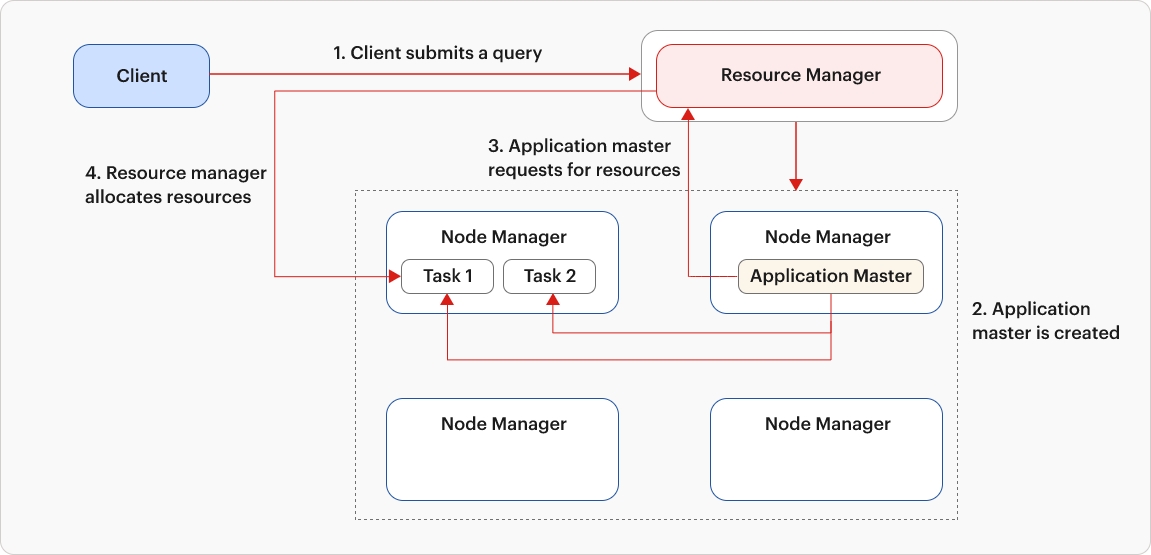

YARN addresses this issue with three key components: the Resource Manager, the Node Manager, and the Application Master. The JobTracker's duties are split in two: cluster-wide resource allocation goes to the Resource Manager, while scheduling and monitoring the tasks of an individual job move to a per-application Application Master. On each worker node, the Node Manager takes the place of the TaskTracker and launches and supervises containers.

When a client submits an application to the Resource Manager, the Resource Manager arranges for a container to be launched on one of the Node Managers. The Application Master for that application is started inside this container.

The Application Master then asks the Resource Manager for the resources its tasks need. When the Resource Manager allocates a container, it passes the container ID and the Node Manager's details to the Application Master. The Application Master contacts that Node Manager to launch the tasks in the allocated containers, and it monitors the tasks until they complete. If a task fails, the Application Master is responsible for re-executing it.
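The hand-off between these components can be sketched as a toy simulation. Nothing here is the YARN API: the classes, the round-robin placement, the retry limit, and the `flaky_task` function are all hypothetical simplifications meant only to show who allocates, who monitors, and who retries.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Container:
    container_id: int
    node: str


@dataclass
class ResourceManager:
    """Hands out containers; it does not monitor individual tasks."""
    nodes: list
    _ids: count = field(default_factory=lambda: count(1))
    _next_node: int = 0

    def allocate(self):
        # Round-robin placement, a simplification of real schedulers.
        node = self.nodes[self._next_node % len(self.nodes)]
        self._next_node += 1
        return Container(next(self._ids), node)


class ApplicationMaster:
    """Per-application: requests containers, runs tasks, retries failures."""

    def __init__(self, rm, max_attempts=3):
        self.rm = rm
        self.max_attempts = max_attempts
        self.log = []

    def run_task(self, task):
        for attempt in range(1, self.max_attempts + 1):
            container = self.rm.allocate()
            try:
                result = task(attempt)
                self.log.append((container.container_id, container.node, "ok"))
                return result
            except RuntimeError:
                self.log.append((container.container_id, container.node, "failed"))
        raise RuntimeError("task failed after all attempts")


def flaky_task(attempt):
    """Hypothetical task that fails on its first attempt only."""
    if attempt == 1:
        raise RuntimeError("simulated failure")
    return "done"


rm = ResourceManager(nodes=["nm1", "nm2"])
am_container = rm.allocate()   # first container hosts the Application Master
am = ApplicationMaster(rm)
result = am.run_task(flaky_task)
print(am_container, result, am.log)
```

In this sketch the `ResourceManager` never sees the task failure; the `ApplicationMaster` records it and requests a fresh container for the retry, which is the division of labor that takes monitoring load off the central scheduler.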

Conclusion

The story of Hadoop is one of ingenuity and adaptation as much as technology. From its beginnings in 2006 to the architectural changes in Hadoop 2.0, this distributed processing framework has evolved to overcome scaling limits and make data processing more efficient. As JobTrackers give way to Resource Managers and Node Managers, one thing remains clear: Hadoop's influence on distributed data processing endures. We continue the journey in our blog on Apache Spark, Apache 'Spark'ing innovation in Data Processing optimization!

About the Author

Ashish Chouhan, an Associate Software Development Engineer at Sigmoid, is a budding data engineer in the industry. His experience includes distributed computing systems such as Apache Spark, cloud-based platforms like Azure and AWS, and various data warehousing solutions. He is passionate about architecting and refining data pipelines, and is keen to apply his knowledge to projects that require robust data engineering solutions.