Why Apache Arrow is the future for open source columnar


Apache Arrow is an open source technology and a de facto standard for columnar in-memory analytics. Engineers from many top-level Apache projects contribute to Apache Arrow. In the coming years, we can expect the big data platforms to adopt Apache Arrow as their columnar in-memory layer.

What can we expect from an in-memory system like Apache Arrow?

- Columnar: Over the past few years, big data has been all about columnar. This shift was primarily inspired by the creation and adoption of Apache Parquet and other columnar data storage technologies.

- In-memory: SAP HANA was the first to accelerate analytical workloads with its in-memory component. Apache Spark then brought the approach into the open source limelight by holding data in memory to speed up workloads.

- Complex data and dynamic schemas: Solving business problems is much easier when the data is represented through hierarchical and nested data structures. This was the primary reason for the adoption of JSON and document-based databases.

At this point, few systems support even two of the above concepts, although many support one of them. That is where Apache Arrow comes in: it supports all three seamlessly.

Arrow is designed to support complex data and dynamic schemas. For performance, it is entirely in-memory and uses columnar storage.
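To make the combination of columnar layout and nested data concrete, here is a minimal sketch in plain Python (standard library only, not the Arrow API). It stores a nested, variable-length field as a flat values buffer plus an offsets buffer, which is the general idea behind columnar list layouts such as Arrow's. The record fields (`user_id`, `scores`) and the helper names are hypothetical, chosen only for illustration.

```python
from array import array

# Row-oriented records with a nested, variable-length field (hypothetical data).
rows = [
    {"user_id": 1, "scores": [10, 20]},
    {"user_id": 2, "scores": []},
    {"user_id": 3, "scores": [30, 40, 50]},
]


def to_columnar(records):
    """Split records into one contiguous buffer per column."""
    user_ids = array("q")             # 64-bit signed integers
    score_offsets = array("i", [0])   # entry i marks where row i's scores start
    score_values = array("q")         # all nested values, flattened end to end
    for rec in records:
        user_ids.append(rec["user_id"])
        score_values.extend(rec["scores"])
        score_offsets.append(len(score_values))
    return user_ids, score_offsets, score_values


def scores_for_row(i, offsets, values):
    """Recover the nested list for row i from the flat buffers."""
    return list(values[offsets[i]:offsets[i + 1]])


user_ids, score_offsets, score_values = to_columnar(rows)
print(list(score_offsets))   # boundaries of each row's nested list
print(scores_for_row(2, score_offsets, score_values))
```

Each column ends up in its own contiguous buffer, so a scan over `user_ids` never touches the nested values, and an empty list costs nothing beyond a repeated offset.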

The bottleneck in a typical system appears when data is moved across machines, and serialization is an overhead in many cases. Arrow improves the performance of data movement within a cluster by avoiding serialization and deserialization.
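The difference between a serialized copy and a shared buffer can be illustrated with a small standard-library sketch. It does not use Arrow itself: `pickle` stands in for a serialization round trip, and `memoryview` stands in for two components reading the same in-memory column. Both stand-ins are assumptions made for illustration.

```python
import pickle
from array import array

# One contiguous column of float64 values.
column = array("d", [1.5, 2.5, 3.5, 4.5])

# Path 1: serialize, then deserialize. The consumer gets its own copy,
# and every byte is written out and read back in.
copied = pickle.loads(pickle.dumps(column))

# Path 2: share the buffer. memoryview exposes the same bytes without copying.
shared = memoryview(column)

# The producer updates the column in place.
column[0] = 99.0

print(copied[0])   # 1.5: the copy no longer tracks the original
print(shared[0])   # 99.0: the view reads the original memory
```

The second path does no per-value work at all, which is the property a common in-memory format is meant to provide between cooperating systems.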

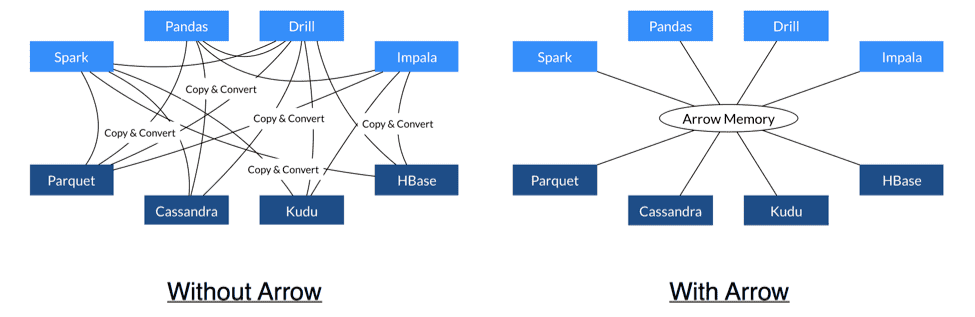

Another important aspect of Arrow appears when two systems both use it as their in-memory storage. For example, Kudu could send Arrow data to Impala for analytics without any costly deserialization on the receiving side, since both are Arrow-enabled. Inter-process communication with Arrow mostly happens over shared memory, TCP/IP and RDMA. Arrow also supports a wide variety of data types, covering both SQL and JSON types, such as Int, BigInt, Decimal, VarChar, Map, Struct and Array.

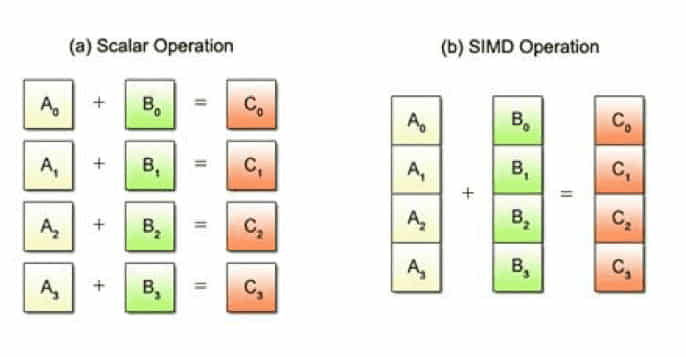

Nobody wants to wait long for answers from their data. The faster users get an answer, the faster they can ask further questions or solve their business problems. CPUs keep getting faster and more sophisticated in design, so the key challenge in any system is keeping CPU utilization close to 100% and using the CPU efficiently. When the data is in a columnar structure, it is much easier to apply SIMD instructions to it.

SIMD is short for Single Instruction, Multiple Data. SIMD operations are a computing method in which a single instruction processes multiple data elements at once. In contrast, the conventional sequential approach, in which one instruction processes one data element, is called a scalar operation. In some cases, when using AVX instructions, these optimizations can increase performance by two orders of magnitude.
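The contrast between scalar and SIMD processing can be modeled in a few lines. Pure Python cannot issue SIMD instructions, so the sketch below only simulates the access pattern: it counts one "instruction" per element in the scalar version and one per chunk in the SIMD-style version. The lane width `LANES = 4` and the function names are hypothetical.

```python
from array import array

LANES = 4  # hypothetical vector width: values handled per instruction


def scalar_add(a, b):
    """Add element by element: one modeled instruction per value."""
    out = array("d")
    instructions = 0
    for x, y in zip(a, b):
        out.append(x + y)
        instructions += 1
    return out, instructions


def simd_style_add(a, b):
    """Add in chunks of LANES values: one modeled instruction per chunk."""
    out = array("d")
    instructions = 0
    for start in range(0, len(a), LANES):
        chunk_a = a[start:start + LANES]
        chunk_b = b[start:start + LANES]
        out.extend(x + y for x, y in zip(chunk_a, chunk_b))
        instructions += 1
    return out, instructions


a = array("d", range(8))
b = array("d", range(8))
scalar_out, scalar_count = scalar_add(a, b)
simd_out, simd_count = simd_style_add(a, b)
print(scalar_count, simd_count)
```

The chunked version only works this simply because each column is a contiguous run of same-typed values, which is why columnar layouts pair well with SIMD hardware.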

Arrow is designed to make the most of cache locality, pipelining and SIMD instructions. Cache locality, pipelining and super-word operations frequently provide 10-100x faster execution. Since many analytical workloads are CPU bound, these benefits translate into dramatic end-user performance gains, which in turn mean faster answers and higher levels of user concurrency.

About the Author

Akhil, a Software Developer at Sigmoid, focuses on distributed computing, big data analytics, scaling and optimising performance.
