Microservices-based architecture: Key to scaling enterprise ML models

The last decade has seen significant innovations in the field of artificial intelligence and machine learning (AI/ML). Today, progressive enterprises increasingly use ML models to drive operational growth through actionable decision-making. In the ML space, an important area of evolution has been MLOps: a set of practices that helps companies combine ML, DevOps, and data engineering to deploy ML models smoothly and maintain ML systems reliably.

Even though ML has become a popular way for organizations to address operational gaps, deploying ML models to the production environment is still a challenge. A major obstacle companies face is that training data sets change constantly. Because organizational data is always evolving, building practical applications on prebuilt offline models can be tricky: to add new data sets to an existing application, data teams need to rebuild models from scratch, recompute scores, and deploy the new models without disrupting the production environment. This is where a microservices architecture can serve as a natural solution, helping companies integrate data seamlessly without disrupting the existing production environment.

In this blog, we discuss how microservice deployment can help companies productionize ML models.

Microservices: Helping Companies Efficiently Deploy, Productionize, and Scale ML Models

Research shows a sharp increase in microservices deployment, and the market is expected to grow rapidly over the next decade. The global microservices architecture market is projected to be worth $8.07 billion by 2026, up from $2.07 billion in 2018, a CAGR of 18.6%. With both microservices architecture and MLOps on the rise, combining the two promises to bring ML success to enterprises that envision a future built on fast application deployment, seamless operations, and agile development.

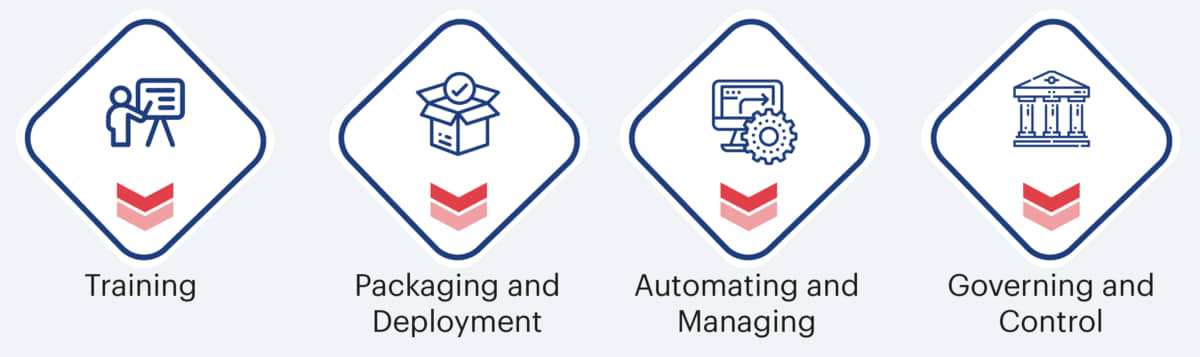

The architecture used to develop and deploy machine learning models has a significant impact on their success. The ML model lifecycle ideally has four key components, which are:

A microservices-based architecture helps create a robust ML model lifecycle, mitigating challenges that arise from underutilized or poorly managed data scientists and data engineers, fragmented communication, and siloed operations. Microservices-based architectures can also make ML models more intuitive for users, increasing productivity and improving the customer experience.

Another key element of microservices-driven MLOps is going serverless, which is crucial for automating container development, auto-scaling workflows, and improving visibility and monitoring. Building automated ML pipelines calls for serverless frameworks together with an open-source tool such as Kubeflow. By combining Kubeflow Pipelines with serverless frameworks such as MLRun or Nuclio, enterprises can quickly develop and deploy automated ML pipelines without having to focus heavily on DevOps.

Productionizing ML models has distinct benefits: a microservices-based architecture supports product-oriented development and deployment of ML models and offers end-to-end visibility into the process. This approach makes development both interactive and iterative, resulting in robust, automated, CI/CD-based ML pipelines. In other words, a sound ML pipeline architecture, built with tools such as Kubeflow Pipelines, is crucial for productionizing ML models and unlocking the full value of MLOps.

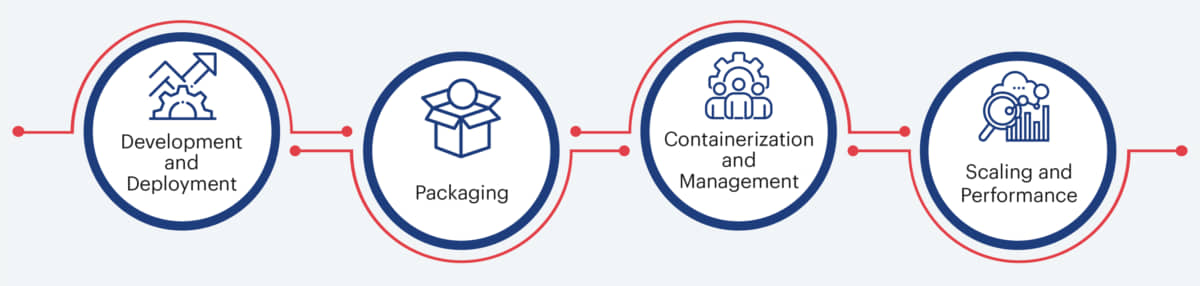

The following are some of the best practices when it comes to facilitating successful MLOps through a microservices architecture:

- Development and Deployment: A key factor in the success of any ML application is how easily its models can be developed and deployed. A common way to develop and deploy models without disrupting the production environment is to train an optimized model offline on a robust training dataset. The model is then validated offline against a predefined validation dataset and only afterwards uploaded to the microservices architecture. Teams can use the central messaging hub of the microservices architecture to collect critical feature data from multiple disparate sources in real time.

- Packaging: A microservices architecture allows developers either to publish a single ML capability as a standalone microservice or to publish an independent piece of application functionality as a microservice with ML capability. In both cases, however, the ML component needs to be managed separately because of the complexity of processes such as data acquisition, model training and development, and model updates.

- Containerization and Management: Integrating an ML algorithm into the IT delivery platform involves two major steps. First, the ML algorithm running behind a particular microservice has to be exposed through a REST API, and the API, runtime, and algorithm have to be packaged together in a Docker container. Second, all the containers (microservices) have to be managed and orchestrated effectively. This is where Kubernetes makes a difference: it groups containers into pods, schedules them across the nodes of a compute cluster, and ensures that workloads run as planned.

- Scaling and Performance: Since the cloud and containers form the backbone of microservices, scalability and performance are integral considerations. When selecting and developing application components, developers should choose databases, runtimes, computing engines, and storage based on the desired performance and scalability.
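The offline development-and-deployment practice described above (train on a robust dataset, validate against a predefined validation set, and only then upload) can be sketched as a promotion gate. This is a minimal, standard-library-only sketch: the toy `ThresholdModel`, the `ACCURACY_THRESHOLD` value, and the plain dictionary standing in for a model registry are all hypothetical placeholders, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical promotion threshold; a real team would pick its own metric and cutoff.
ACCURACY_THRESHOLD = 0.9

Example = Tuple[float, int]  # (feature value, binary label)


@dataclass(frozen=True)
class ThresholdModel:
    """Toy one-feature classifier standing in for a real trained model."""
    cutoff: float

    def predict(self, x: float) -> int:
        return 1 if x >= self.cutoff else 0


def accuracy(model: ThresholdModel, data: List[Example]) -> float:
    # Fraction of examples the model labels correctly.
    return sum(model.predict(x) == y for x, y in data) / len(data)


def train_offline(training: List[Example]) -> ThresholdModel:
    """Offline training: pick the observed cutoff with the best training accuracy."""
    best = ThresholdModel(training[0][0])
    best_score = -1.0
    for x, _ in training:
        candidate = ThresholdModel(x)
        score = accuracy(candidate, training)
        if score > best_score:
            best, best_score = candidate, score
    return best


def validate_and_publish(
    model: ThresholdModel,
    validation: List[Example],
    registry: Dict[str, ThresholdModel],
    version: str,
) -> bool:
    """Publish only if the model clears the threshold on the held-out validation set.

    On failure the registry is left untouched, so the serving microservice
    keeps using whichever version it already has.
    """
    if accuracy(model, validation) < ACCURACY_THRESHOLD:
        return False
    registry[version] = model
    return True
```

Because a rejected model never reaches the registry, retraining on new data cannot disrupt what production is serving; in a real deployment the dictionary would be replaced by whatever model store the serving microservice loads from.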
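The first containerization step (exposing the ML algorithm behind a REST API) can be sketched with Python's standard library alone. This is a minimal sketch rather than a production server: the `/predict` route, the JSON payload shape, the default port 8080, and the fixed linear `WEIGHTS`/`BIAS` model are hypothetical stand-ins for a real trained artifact. Packaging this file and its runtime into a Docker image would be the next step the text describes.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Any, Dict, List, Tuple

# Hypothetical model: a fixed linear scorer standing in for a real trained artifact.
WEIGHTS = [0.5, -0.25, 1.0]
BIAS = 0.1


def predict(features: List[float]) -> float:
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, features))


def handle_predict(body: bytes) -> Tuple[int, Dict[str, Any]]:
    """Request logic kept separate from HTTP plumbing so it can be tested without a socket."""
    try:
        payload = json.loads(body)
        features = [float(v) for v in payload["features"]]
        return 200, {"score": predict(features)}
    except (ValueError, KeyError, TypeError) as exc:
        # Malformed JSON, a missing "features" key, or wrong types/lengths -> 400.
        return 400, {"error": str(exc)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        status, result = handle_predict(self.rfile.read(length))
        self._send(status, result)

    def _send(self, status: int, result: Dict[str, Any]) -> None:
        data = json.dumps(result).encode()
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host: str = "0.0.0.0", port: int = 8080) -> ThreadingHTTPServer:
    # A container entrypoint could call make_server().serve_forever().
    return ThreadingHTTPServer((host, port), PredictHandler)
```

For example, `POST /predict` with the body `{"features": [1, 2, 3]}` would return `{"score": 3.1}` (up to floating-point rounding). Once containerized, several replicas of this service could run behind a Kubernetes Service, with Kubernetes handling scheduling and restarts.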

What Differentiates Microservices Architecture?

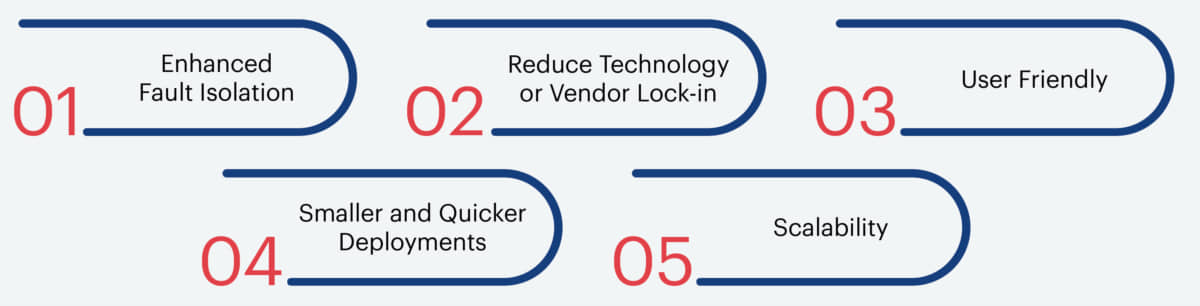

Several aspects make microservices architecture an ideal option for large enterprises. Compared with more conventional monolithic architectures, a microservices architecture delivers:

- Enhanced Fault Isolation: The failure of a single module within microservices doesn’t affect the larger application.

- Reduced Technology or Vendor Lock-in: Microservices provide much-needed flexibility to adopt a new technology stack within an individual service, based on its requirements.

- User Friendly: The microservices architecture is user-friendly, which helps developers clearly understand what each service does.

- Smaller and Quicker Deployments: The presence of smaller code bases in microservices allows for faster deployments.

- Scalability: Services are deployed separately in a microservices architecture, which allows enterprises to scale each one individually based on its requirements.
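Because each service is deployed separately, each can be scaled on its own load. As a rough illustration, the sketch below applies the replica formula documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), to every service independently. The service names, CPU utilization figures, and min/max bounds are hypothetical; the 0.1 tolerance mirrors the autoscaler's default, and the real controller also applies stabilization windows and other behaviors this simplified sketch omits.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class ServiceLoad:
    current_replicas: int
    current_cpu_utilization: float  # average across replicas, e.g. 0.9 = 90%
    target_cpu_utilization: float
    min_replicas: int = 1   # hypothetical bounds
    max_replicas: int = 10


def desired_replicas(load: ServiceLoad, tolerance: float = 0.1) -> int:
    """HPA-style calculation for a single service (simplified)."""
    ratio = load.current_cpu_utilization / load.target_cpu_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: leave the replica count unchanged.
        return load.current_replicas
    desired = math.ceil(load.current_replicas * ratio)
    return max(load.min_replicas, min(load.max_replicas, desired))


def plan(services: Dict[str, ServiceLoad]) -> Dict[str, int]:
    # Each service is sized from its own metrics; one hot service does not
    # force the others to scale, unlike a monolith scaled as a single unit.
    return {name: desired_replicas(load) for name, load in services.items()}
```

For instance, a hypothetical model-serving service running 3 replicas at 90% CPU against a 50% target would be scaled to ceil(3 × 1.8) = 6 replicas, while a feature-ingestion service sitting near its target would keep its current count.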

Conclusion

Microservices architecture is central to enterprises' aspirations of creating a robust MLOps environment. It changes how data is ingested and validated, creates an agile environment for development and deployment, and adds another layer of security to the entire process. It also supports continuous integration and delivery, significantly reducing time-to-value. With so much to offer, enterprises should move quickly from traditional architectures to microservices deployment and optimize MLOps to gain a definitive business advantage.

About the author

Shreya is a Data Engineer at Sigmoid who currently works on building the Query Engine and ETL pipelines on Spark and BigQuery, and was involved in migrating Sigview from a monolith to microservices.