How to use MLOps for an effective AI strategy

87% of machine learning projects fail to make it into production. Deploying ML models in business use cases means working around several data and engineering bottlenecks that impede implementation. In fact, ML teams spend a quarter of their time building the infrastructure needed for ML modeling.

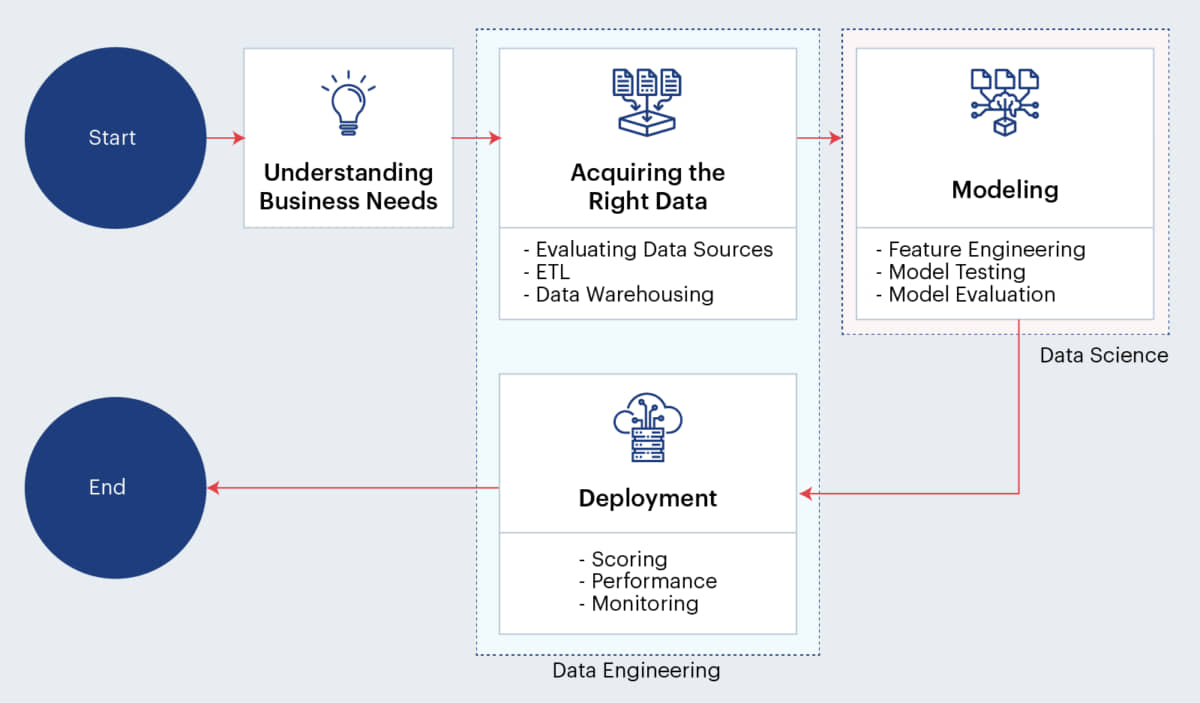

The Machine Learning Lifecycle

(Adapted from Microsoft’s Data Science Lifecycle)

In a previous article, we discussed in detail why so many ML initiatives fail to reach the production phase. The need to address these challenges, along with the smaller nuances of deploying ML models, has given rise to the relatively new concept of MLOps.

What is MLOps?

MLOps, a set of best practices aimed at automating the ML lifecycle, brings together ML system development and ML system operations. An amalgamation of DevOps, machine learning, and data engineering, MLOps simplifies machine learning deployment in diverse business scenarios by establishing ML as an engineering discipline. This makes otherwise challenging deployment scenarios easier to manage.

Businesses can leverage MLOps to craft a definitive process for driving tangible outcomes through ML. One of the most prominent reasons behind the rising popularity of MLOps is its ability to bridge the expertise gap between business and data teams. Moreover, the widespread adoption of ML has influenced the evolution of the regulatory landscape. As this effect continues to grow, MLOps will help enterprises handle the bulk of regulatory compliance without impacting data practices.

Finally, the combined expertise of data and operations teams allows MLOps to bypass the bottlenecks that exist in the deployment process. As we explore further, we will see how MLOps tightens the loop and irons out the creases in the machine learning system design and implementation framework.

MLOps framework for success

Since MLOps is a nascent field, it can be difficult to grasp what it entails and what it requires. One of the foremost challenges in implementing MLOps is the difficulty of superimposing DevOps practices on ML pipelines. This is primarily due to a fundamental difference: DevOps deals with code, whereas ML involves both code and data. And when it comes to data, unpredictability is always a major concern.

Since code and data evolve independently and in parallel, the resulting disconnect causes ML production models to be slow and often inconsistent. Moreover, a simple CI/CD approach may not be enough: large volumes of data are difficult to track and version, which undermines reproducibility. Therefore, for machine learning in production, it is crucial to adopt a CI/CD/CT (continuous training) approach.
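To make the "code and data" point concrete, here is a minimal sketch of versioning data alongside code, assuming a dataset small enough to hash in memory. The function names and the `git:abc123` label are hypothetical placeholders, not part of any particular MLOps tool.

```python
import hashlib
import json


def fingerprint_rows(rows):
    """Return a SHA-256 hex digest that changes whenever the data changes."""
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the serialization independent of dict key order
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def run_record(code_version, rows):
    """Pair a code version with a data fingerprint for one training run."""
    return {"code_version": code_version, "data_version": fingerprint_rows(rows)}


rows_v1 = [{"age": 34, "churned": 0}, {"age": 51, "churned": 1}]
rows_v2 = rows_v1 + [{"age": 29, "churned": 0}]

# "git:abc123" is a made-up commit label used only for illustration.
record_a = run_record("git:abc123", rows_v1)
record_b = run_record("git:abc123", rows_v2)

# Same code, different data: the data versions differ, so the change is traceable.
print(record_a["data_version"] != record_b["data_version"])
```

Recording both identifiers for every run is one simple way to tell whether a change in model behaviour came from the code or from the data.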

Exploring the ML pipeline (CI/CD/CT)

Data teams need to treat the ML pipeline simply as a code artefact that stands independent of individual data instances. This is why breaking it up into two distinct pipelines (a training pipeline and a serving pipeline) can help ensure a safe run environment for batch files as well as an effective test cycle.

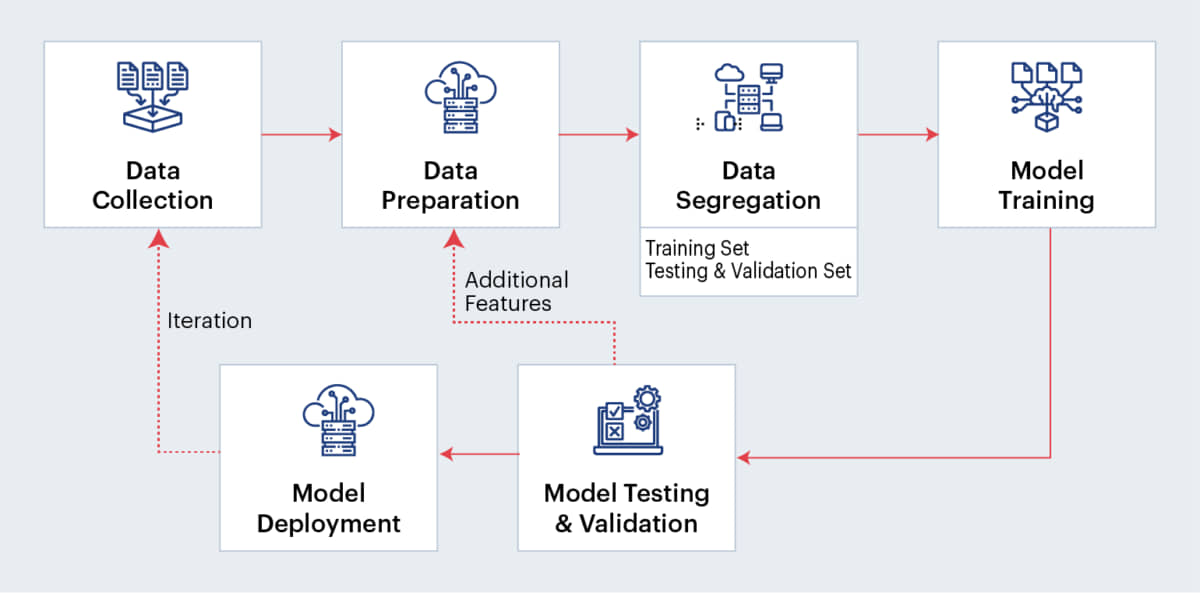

The training pipeline covers the entire model preparation process, which starts with collecting and preparing data. Once the data is collected, validated, and prepared, data scientists apply feature engineering to derive the values the model will use in training as well as in production. At the same time, an algorithm has to be chosen that will define how the model identifies data patterns. Once this is done, the model can start training on historical offline data. The trained model can then be evaluated and validated before being deployed through a model registry to the production pipeline.

A schematic representation of the complete model preparation process
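The training stages described above can be sketched in a few lines. This is an illustrative toy, not a recommended implementation: the `usage` field, the threshold "algorithm", the 0.8 accuracy gate, and the plain list standing in for a model registry are all assumptions made for the example.

```python
from statistics import mean


def validate(rows):
    """Keep only rows with a usable feature value and a binary label."""
    return [r for r in rows if r["usage"] is not None and r["label"] in (0, 1)]


def engineer_features(row):
    """Scale raw usage (assumed 0-100); serving must reuse this exact function."""
    return row["usage"] / 100.0


def train(rows):
    """Toy 'algorithm': a threshold halfway between the two class means."""
    positives = [engineer_features(r) for r in rows if r["label"] == 1]
    negatives = [engineer_features(r) for r in rows if r["label"] == 0]
    return {"threshold": (mean(positives) + mean(negatives)) / 2}


def predict(model, row):
    return 1 if engineer_features(row) >= model["threshold"] else 0


def evaluate(model, rows):
    """Accuracy on held-out rows."""
    return mean([1.0 if predict(model, r) == r["label"] else 0.0 for r in rows])


def run_training_pipeline(train_rows, holdout_rows, registry, min_accuracy=0.8):
    """Validate, train, evaluate, and register the model only if it passes."""
    model = train(validate(train_rows))
    accuracy = evaluate(model, validate(holdout_rows))
    if accuracy >= min_accuracy:
        registry.append({"model": model, "accuracy": accuracy})
    return accuracy


historical = [
    {"usage": 10, "label": 0}, {"usage": 20, "label": 0},
    {"usage": 80, "label": 1}, {"usage": 90, "label": 1},
    {"usage": None, "label": 1},  # rejected by validation
]
holdout = [
    {"usage": 15, "label": 0}, {"usage": 30, "label": 0},
    {"usage": 70, "label": 1}, {"usage": 85, "label": 1},
]

registry = []  # stand-in for a model registry
accuracy = run_training_pipeline(historical, holdout, registry)
print(accuracy, len(registry))
```

The validation gate before registration is the important design choice here: a model that fails evaluation never reaches the production pipeline.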

The production (serving) pipeline uses the deployed model to generate predictions based on online or real-world data sets. This is where the CI/CD/CT approach comes full circle through pipeline automation. The data is collected from the endpoint and enriched with additional data from the feature store. This is followed by the automated process of data preparation, model training, evaluation, validation, and eventually generating predictions. Some of the components that fortify this automation process include metadata management, pipeline triggers, the feature store, and independent data and model versioning.

Once the model is deployed in the pipeline, the resulting data can then be used to continuously train models in the training pipeline. Doing so closes the data/code loop and simplifies the deployment process.
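As a rough illustration of the serving side and the continuous training loop, the sketch below enriches an incoming request from a feature store, logs each prediction, and fires a retraining trigger once enough new records have accumulated. The in-memory dictionary, the `avg_usage` feature, the customer IDs, and the count-based trigger are hypothetical simplifications; real pipelines typically trigger on schedules, data volume, or monitored metrics.

```python
# Hypothetical in-memory feature store keyed by customer ID.
FEATURE_STORE = {
    "cust-1": {"avg_usage": 0.9},
    "cust-2": {"avg_usage": 0.2},
}


def enrich(request, feature_store):
    """Merge the endpoint payload with stored features for the same entity."""
    stored = feature_store.get(request["customer_id"], {})
    return {**request, **stored}


def serve(request, model, feature_store, prediction_log):
    """Predict for one request and log the record for later retraining."""
    features = enrich(request, feature_store)
    score = features.get("avg_usage", 0.0)  # unknown customers default to 0.0
    prediction = 1 if score >= model["threshold"] else 0
    prediction_log.append({**features, "prediction": prediction})
    return prediction


def should_retrain(prediction_log, batch_size):
    """Pipeline trigger: fire once enough new records have been collected."""
    return len(prediction_log) >= batch_size


model = {"threshold": 0.5}
prediction_log = []
results = [
    serve({"customer_id": cid}, model, FEATURE_STORE, prediction_log)
    for cid in ("cust-1", "cust-2", "cust-3")
]
print(results, should_retrain(prediction_log, batch_size=3))
```

When the trigger fires, the logged records (joined with their eventual true outcomes) become new input for the training pipeline, which is what closes the loop.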

Building the right team

The MLOps team should ideally include members from the operations, IT, and data science divisions. An enterprise leader with experience in operationalizing machine learning should lead this team.

As for alignment, MLOps teams can either be aligned with the enterprise architecture team under IT or integrated with the central analytics or data science team. Depending on the requirement, MLOps teams can work with specific business units together with Data Science or IT teams to manage complex models.

MLOps team leaders need to clearly define roles for tasks such as data preparation, training ML models, and deploying ML models.

Advantages of MLOps & the way forward

The foremost benefit of MLOps is rapid, innovative ML lifecycle management. MLOps solutions make it easier for data teams to collaborate with IT engineers and increase the speed of model development. Moreover, the ability to monitor, validate, and manage systems for machine learning models expedites the deployment process.

Besides saving time through rapid automated workflows, MLOps supports the optimization and reusability of resources. By leveraging MLOps, IT teams can create a self-learning model that can accommodate data drift in the long run.
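Accommodating data drift starts with detecting it. The following is a deliberately simple mean-shift check, assuming a single numeric feature and an arbitrary threshold of two baseline standard deviations; production monitoring usually relies on more robust statistical tests, and the sample values here are invented for illustration.

```python
from statistics import mean, stdev


def drift_score(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline standard deviations."""
    return abs(mean(live) - mean(baseline)) / stdev(baseline)


def has_drifted(baseline, live, threshold=2.0):
    """Flag drift when the live mean moves more than `threshold` deviations."""
    return drift_score(baseline, live) > threshold


baseline = [10, 12, 11, 13, 9, 11, 10, 12]  # feature values seen in training
live_stable = [11, 10, 12, 11]              # recent values, similar distribution
live_shifted = [18, 19, 17, 20]             # recent values, clearly higher

print(has_drifted(baseline, live_stable), has_drifted(baseline, live_shifted))
```

A positive drift flag is a natural input to a pipeline trigger, prompting the training pipeline to retrain on more recent data.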

The quick rise of MLOps points to a future in which it becomes a competitive necessity. As the benefits of machine learning are explored in research as well as industry use cases, enterprises need to keep pace with the agility of modern business models and adapt to changing circumstances. While that is still some way into the future, enterprises will need to act now to grab the opportunity when it arises.

Also, read our blog on the 5 best practices to put ML models in production.

About the author

Anwar is a business transformation manager at Sigmoid. For nearly a decade, he has led development and deployment of scalable AI solutions for clients in various industry domains helping them advance their analytics journey.
