The GenAI adoption triad: Responsibility, Ethics, and Explainability


Generative AI is no longer just a tech experiment; it is shaping boardroom strategy, powering marketing campaigns, optimising supply chains, and redefining customer engagement. The potential is undeniable—faster decision-making, smarter operations, and new ways of creating business value.

But here’s the catch: potential alone isn’t enough to drive enterprise adoption of generative AI at scale. What truly determines success is trust.

That trust doesn’t happen by default. It needs to be intentionally built into the design and deployment of every GenAI system, at every stage of its lifecycle. At the heart of this trust-building endeavour are three core principles: Responsibility, Ethics, and Explainability. These are not optional ideals, but essential pillars for confident and sustainable adoption of generative AI.

Responsibility: Putting guardrails in place for accountability

As more teams integrate GenAI to automate tasks or support decisions, a critical question emerges: “Are we in control of what this system is doing?” Hallucinated outputs, exposure of sensitive data, and untraceable decisions can quickly spiral into risks that are difficult to manage once GenAI is in production.

Responsibility means being deliberate about where and how GenAI is applied. It requires an understanding of the risk landscape and robust structures for AI risk management. For enterprises, this includes choosing the right (compliant) data, creating the right governance mechanisms, implementing continuous validation processes, and ensuring there is always human accountability for outcomes.

Key elements of responsible AI include:

- Fairness, security and privacy-preserving measures

- Governance and continuous improvement mechanisms

- Training on curated, compliant data that reflects organizational values

In practice, this might mean deploying human-in-the-loop checks for high-impact use cases, applying rigorous validation or scenario testing before deployment, and proactively managing data lineage. Staying aligned with the FAIR data principles (Findable, Accessible, Interoperable, and Reusable) helps ensure that AI outputs are traceable and reproducible.
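As a rough illustration of a human-in-the-loop check, the sketch below routes outputs for high-impact use cases to a reviewer and lets everything else through. The use-case labels, the `DraftOutput` type, and the `route` function are hypothetical names chosen for this example; a real deployment would derive its rules from the organization's own risk assessment.

```python
from dataclasses import dataclass

# Hypothetical labels for use cases treated as high impact (an assumption
# for this sketch, not a recommended taxonomy).
HIGH_IMPACT_USE_CASES = {"medical_recommendation", "pricing", "policy_decision"}


@dataclass
class DraftOutput:
    use_case: str  # what the GenAI output will be used for
    text: str      # the generated content itself


def route(draft: DraftOutput) -> str:
    """Send high-impact drafts to a human reviewer; release the rest."""
    if draft.use_case in HIGH_IMPACT_USE_CASES:
        return "human_review"
    return "auto_release"


print(route(DraftOutput("pricing", "Raise price by 4%")))       # human_review
print(route(DraftOutput("internal_summary", "Meeting notes")))  # auto_release
```

The point of keeping the rule this simple is that accountability stays visible: anyone can read the list and see which outputs always pass through a person.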

By embedding responsible AI solutions or frameworks early, enterprises can reduce risks while building the foundation for confident scaling.

Ethics: Defining what is acceptable

GenAI doesn’t come with an ethical compass. When left unchecked, it can amplify biases, produce stereotypes, and generate unsafe or misleading content. This makes it vital for organizations to design AI systems that are ethical by intent right from the start.

Ethical deployment of GenAI should focus on:

- Reducing bias in training data and model outputs

- Respecting copyright and intellectual property when generating content from, or reusing, publicly available data

- Avoiding over-reliance on GenAI for sensitive decisions that can impact others

This becomes even more important as GenAI starts contributing to high-stakes outputs like product claims, medical recommendations, or policy decisions. In these cases, even a small oversight could lead to reputational damage or regulatory challenges.

Ethics isn’t one-size-fits-all. What’s considered ethical can vary by context and geography. Enterprises need to develop customized frameworks that involve diverse stakeholders, including legal, compliance, product, and data science teams.

For example, when generating marketing content or product descriptions, businesses should have filters in place to flag and prevent content that might be offensive, misleading, or discriminatory. Where needed, ethical review boards or model governance councils can formalize this process.
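A filter of this kind can start out very simple. The sketch below flags generated marketing copy against a small set of patterns; the rule names and regular expressions in `FLAG_RULES` are illustrative assumptions only, and a production filter would more likely combine such rules with a trained classifier and human review.

```python
import re

# Hypothetical rule set for this sketch: label -> pattern that triggers it.
FLAG_RULES = {
    "unverified_claim": re.compile(r"\b(guaranteed|cures?|100% safe)\b", re.IGNORECASE),
    "absolute_superlative": re.compile(r"\b(best in the world|number one)\b", re.IGNORECASE),
}


def flag_content(text: str) -> list[str]:
    """Return the sorted labels of every rule the text triggers."""
    return sorted(label for label, pattern in FLAG_RULES.items() if pattern.search(text))


print(flag_content("This cream cures acne, guaranteed."))  # ['unverified_claim']
print(flag_content("A lightweight jacket for spring."))    # []
```

Content that returns any label would be held back and passed to whoever owns the review process, rather than being silently rewritten.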

Ethical alignment should never be treated as a checkbox exercise. Instead, it must be a shared practice and consistently applied across business teams for responsible AI governance.

Explainability: Trust begins with understanding

Of the three pillars, explainability is arguably the most critical and often the most overlooked. As enterprises progress in their Generative AI adoption journey, they are shifting from using GenAI for content generation to relying on it for decision-making.

Whether it’s a pricing recommendation, a chatbot suggesting next-best actions, or an AI model prioritizing sales leads, these decisions carry real business consequences. To act on them, users need to understand not just the what, but the why behind each output.

Explainability isn’t about exposing every layer of a neural network. It is about making the rationale behind a system’s decision clear and traceable so that business users can trust its output.

This involves:

- Showing the logic path or inputs used to arrive at a recommendation

- Highlighting confidence scores and accuracy thresholds

- Providing consistent outputs for consistent inputs/questions, especially in regulatory or high-impact environments
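One way to picture the first two points is a recommendation that always travels with its inputs, rationale, and confidence. The sketch below is a minimal illustration under stated assumptions: the `Recommendation` fields, the `present` helper, and the 0.8 threshold are hypothetical and would be set per use case.

```python
from dataclasses import dataclass, field

# Assumed threshold for this sketch; the right value depends on the use case.
CONFIDENCE_THRESHOLD = 0.8


@dataclass
class Recommendation:
    action: str                                  # the "what"
    confidence: float                            # model-reported score in [0, 1]
    inputs: dict = field(default_factory=dict)   # data the output was based on
    rationale: str = ""                          # the "why", in plain language


def present(rec: Recommendation) -> str:
    """Format a recommendation so the user sees the what, the why, and the score."""
    status = "OK to act" if rec.confidence >= CONFIDENCE_THRESHOLD else "Needs review"
    used = ", ".join(f"{key}={value}" for key, value in sorted(rec.inputs.items()))
    return (
        f"{rec.action} | confidence {rec.confidence:.2f} ({status}) "
        f"| inputs: {used} | why: {rec.rationale}"
    )


rec = Recommendation(
    action="Prioritize lead A",
    confidence=0.72,
    inputs={"recent_visits": 5, "deal_size": 40000},
    rationale="High engagement in the last 30 days",
)
print(present(rec))
```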

Let’s take a simple example: if a GenAI assistant suggests discontinuing a product line based on sales forecasts, users should be able to trace that decision back to the underlying data and assumptions. If the same prompt is run again with the same data, the result should remain consistent. This is how trust in automation is built.
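The consistency requirement can be checked mechanically. The sketch below fingerprints a prompt and its data so that a decision can be traced to exactly what it was based on, then re-runs the same request to confirm the answer does not change. Here `toy_assistant` is a deterministic stand-in for a real GenAI call, and all function names are assumptions made for this example.

```python
import hashlib
import json


def fingerprint(prompt: str, data: dict) -> str:
    """Stable hash of prompt and data, independent of dictionary key order."""
    payload = json.dumps({"prompt": prompt, "data": data}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def is_consistent(generate, prompt: str, data: dict, runs: int = 3) -> bool:
    """Call generate(prompt, data) several times and check the outputs agree."""
    outputs = {generate(prompt, data) for _ in range(runs)}
    return len(outputs) == 1


def toy_assistant(prompt: str, data: dict) -> str:
    # Stand-in for a GenAI assistant: a fixed rule on the forecast data.
    if data["forecast_units"] < data["break_even_units"]:
        return "discontinue"
    return "keep"


question = "Should we discontinue product line X?"
sales = {"forecast_units": 900, "break_even_units": 1200}

print(toy_assistant(question, sales))                 # discontinue
print(is_consistent(toy_assistant, question, sales))  # True
print(fingerprint(question, sales)[:12])              # short ID to log with the decision
```

Logging the fingerprint next to each decision gives reviewers a way to confirm later that two runs really did see the same prompt and data.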

Explainability also underpins effective human-AI collaboration. In hybrid environments, where humans and GenAI agents work together, the ability to interrogate and understand AI decisions ensures oversight and accountability. As AI models become more autonomous, explainability will become the go/no-go filter for enterprise adoption of GenAI.

Responsibility, Ethics, and Explainability form the foundation of successful Generative AI adoption.

Responsibility provides governance, ethics defines boundaries, and explainability builds trust. This triad empowers enterprises to scale GenAI safely, transparently, and with lasting business impact.

How Sigmoid enables confident scaling of GenAI across the enterprise

The real challenge in scaling GenAI isn’t in building powerful models; it is in ensuring those models behave as your business expects. At Sigmoid, we embed trust into every layer of the GenAI lifecycle, not just the model itself. Our approach starts with curated, secure, and bias-resistant datasets that reflect real-world diversity, because responsible AI begins long before the model is trained.

We design models for explainability from the start. Whether it’s a pricing suggestion or product insight, our systems are built to show not just the answer, but the reasoning behind it, which enables business users to act with clarity and confidence.

But AI doesn’t stop learning at launch. Our continuous monitoring pipelines ensure that the AI models stay reliable, accurate, and aligned with evolving needs. Recognizing that every enterprise has unique ethical and regulatory considerations, we customize safeguards, from content moderation to role-based permissions, to match each client’s context. That’s why we work closely with stakeholders to tailor AI behaviour: what content is appropriate, how decisions are communicated, how outputs are moderated, and where human intervention is necessary.

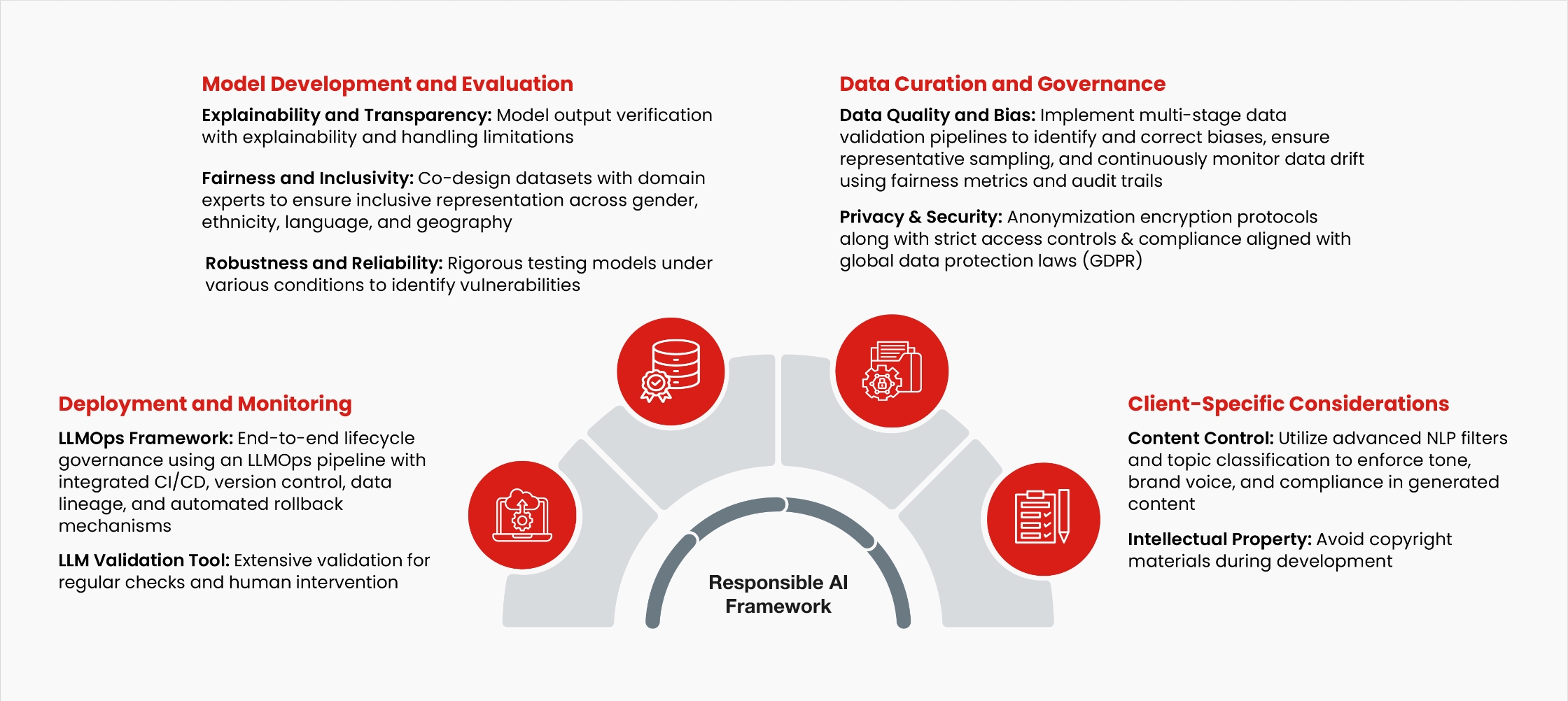

Fig.1. Sigmoid’s Responsible and Ethical AI framework

This comprehensive methodology comes together in our responsible AI framework, which integrates best practices for data curation, model development, deployment, and governance. Our approach ensures enterprises can scale GenAI transparently. Ultimately, the success of GenAI doesn’t just depend on how intelligent it is, but on how much your people can trust it.

About the author

Debapriya Das is Director of Data Science at Sigmoid and leads the Generative AI Centre of Excellence. With over 14 years of experience, he has delivered transformative AI and analytics solutions for Fortune 500 brands across CPG, retail, e-commerce, insurance, and media. A ‘40 Under 40 Innovator in Data Science’, Debapriya specializes in GenAI, NLP, image analytics, and IoT. He drives innovation through accelerators, campaign optimization engines, recommendation engines, data strategy, and advanced R&D tools that shape how businesses make decisions with data and technology.
